In a recent blog post, we laid out five compelling reasons why delaying upgrades is a bad idea. From mounting security risks to rising cloud support fees and forced upgrades, the costs of falling behind are real and measurable.

And yet... we still delay them.

This post is about the why. Not the idealized, rational why. The real why. The human, emotional, political, and logistical friction that makes upgrades one of the most postponed tasks in engineering.

These aren’t excuses. They’re real tradeoffs. And if we want to make upgrades easier and more reliable, we have to name what’s actually getting in the way.

Let’s get into it.

This is the one no one really wants to admit out loud, but it's true everywhere. When you're shipping for customers, internal upgrades fall to the bottom of the list. Especially in smaller teams where the same people own both product and platform.

It’s not that people don’t care. It’s just that time is finite. If you have to choose between unblocking a revenue-generating feature and upgrading some add-on that’s "still working," the decision is obvious. But those short-term gains often create long-term risks and compounding challenges.

Even in bigger orgs with dedicated platform teams, upgrades get pushed off because they feel risky. Not because we don’t know how to do them, but because we’ve seen what happens when they go wrong.

A single misstep can cause downtime. That downtime can trigger postmortems, Slack threads, and finger-pointing. Nobody wants to be the person who approved the change that broke production, even if the fix was technically sound.

The risk of breaking something now feels more real than the risk of running outdated software later. So we wait.

One of the most overlooked reasons upgrades get delayed isn’t the code. It’s the coordination. Upgrading a cluster often requires collaboration across multiple teams: platform, app owners, security, compliance. Everyone’s on a slightly different schedule with different goals.

If something goes wrong, whose fault is it? Who owns the recovery? Who notifies which team?

Upgrades without clear ownership or process create interpersonal tension. And people avoid tension, even if it's subtle. So instead of pushing forward, teams quietly pause.

Take something that sounds straightforward: moving from cgroup v1 to v2. On paper, it’s a kernel update. In practice, it quickly becomes a company-wide mega project. Some services still rely on old system paths. Others might be calling deprecated interfaces directly. Before making the switch, someone has to figure out:

That’s not just a technical checklist. It’s coordination. It’s cross-team planning. It’s change management.

And for many teams, the work required to get everyone aligned feels harder and riskier than just sticking with the old version a little longer. So the upgrade waits.
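To make the cgroup example a little more concrete, here is a minimal Python sketch of the kind of inventory someone might run before the switch. It assumes the standard `/sys/fs/cgroup` mount point and relies on the fact that the unified (v2) hierarchy exposes a `cgroup.controllers` file at its root. The function names, the controller list, and the path pattern are illustrative choices, not part of any official tool, and the text scan is only a heuristic for spotting hard-coded v1 paths in scripts or configs.

```python
import re
from pathlib import Path

# Controller directory names used by the cgroup v1 layout (not exhaustive).
V1_CONTROLLERS = ("memory", "cpuacct", "cpuset", "cpu", "blkio", "devices", "freezer", "pids")

# Heuristic: v1 paths put the controller name in its own directory,
# e.g. /sys/fs/cgroup/memory/..., while v2 files such as
# /sys/fs/cgroup/memory.max sit directly in the unified hierarchy.
V1_PATH_RE = re.compile(r"/sys/fs/cgroup/(?:%s)/" % "|".join(V1_CONTROLLERS))


def detect_cgroup_version(root: Path = Path("/sys/fs/cgroup")) -> str:
    """Return "v2", "v1", or "unknown" for the hierarchy mounted at root."""
    # The unified (v2) hierarchy exposes cgroup.controllers at its root.
    if (root / "cgroup.controllers").is_file():
        return "v2"
    # A v1 (or hybrid) layout has one directory per controller instead.
    if any((root / name).is_dir() for name in V1_CONTROLLERS):
        return "v1"
    return "unknown"


def find_v1_path_references(text: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs that mention a v1-style cgroup path."""
    return [
        (number, line.strip())
        for number, line in enumerate(text.splitlines(), start=1)
        if V1_PATH_RE.search(line)
    ]
```

Running `detect_cgroup_version()` on each node class and `find_v1_path_references()` over service scripts gives a rough list of who needs to be in the room. It will not catch services that reach cgroup files through libraries or runtime flags, which is part of why the coordination is hard.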

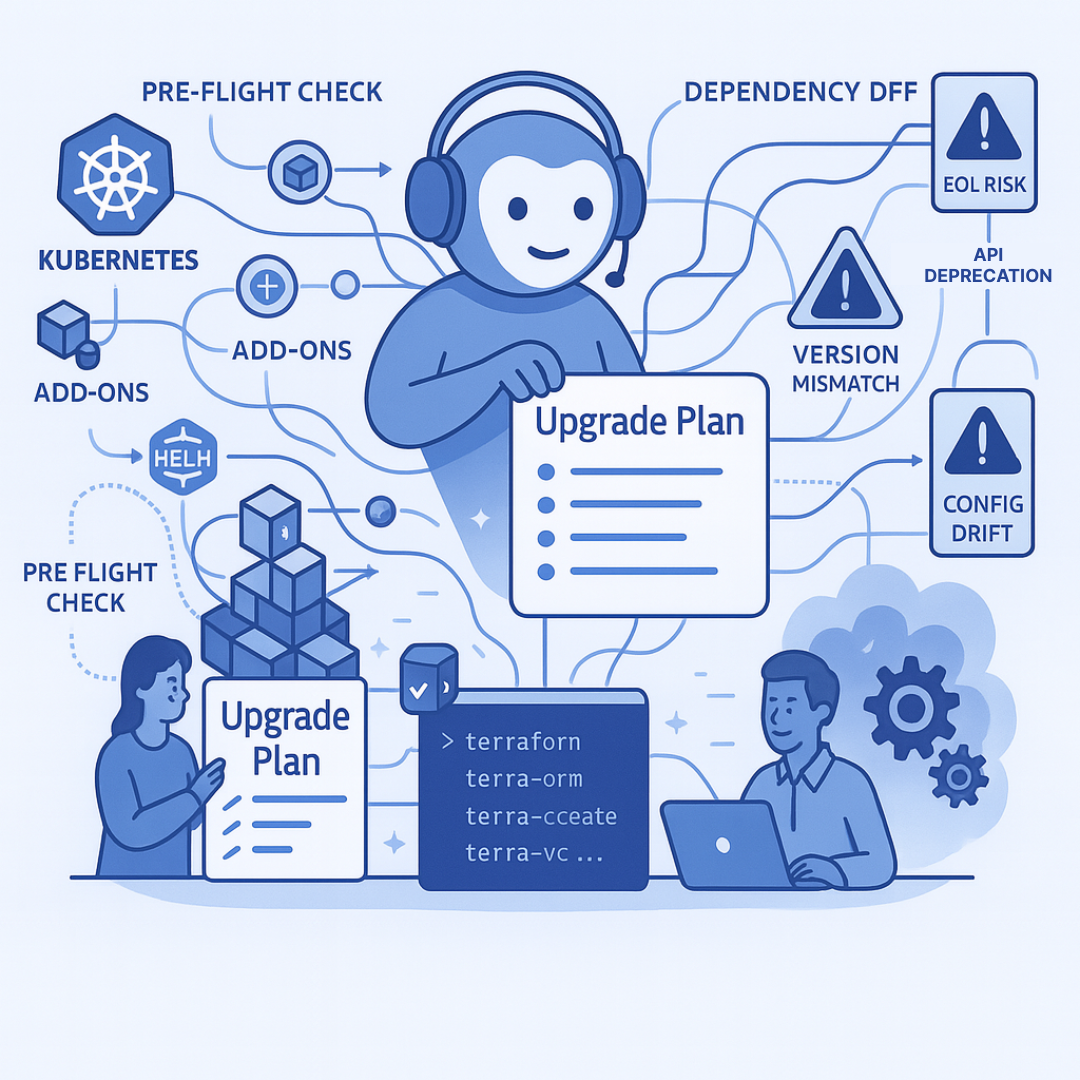

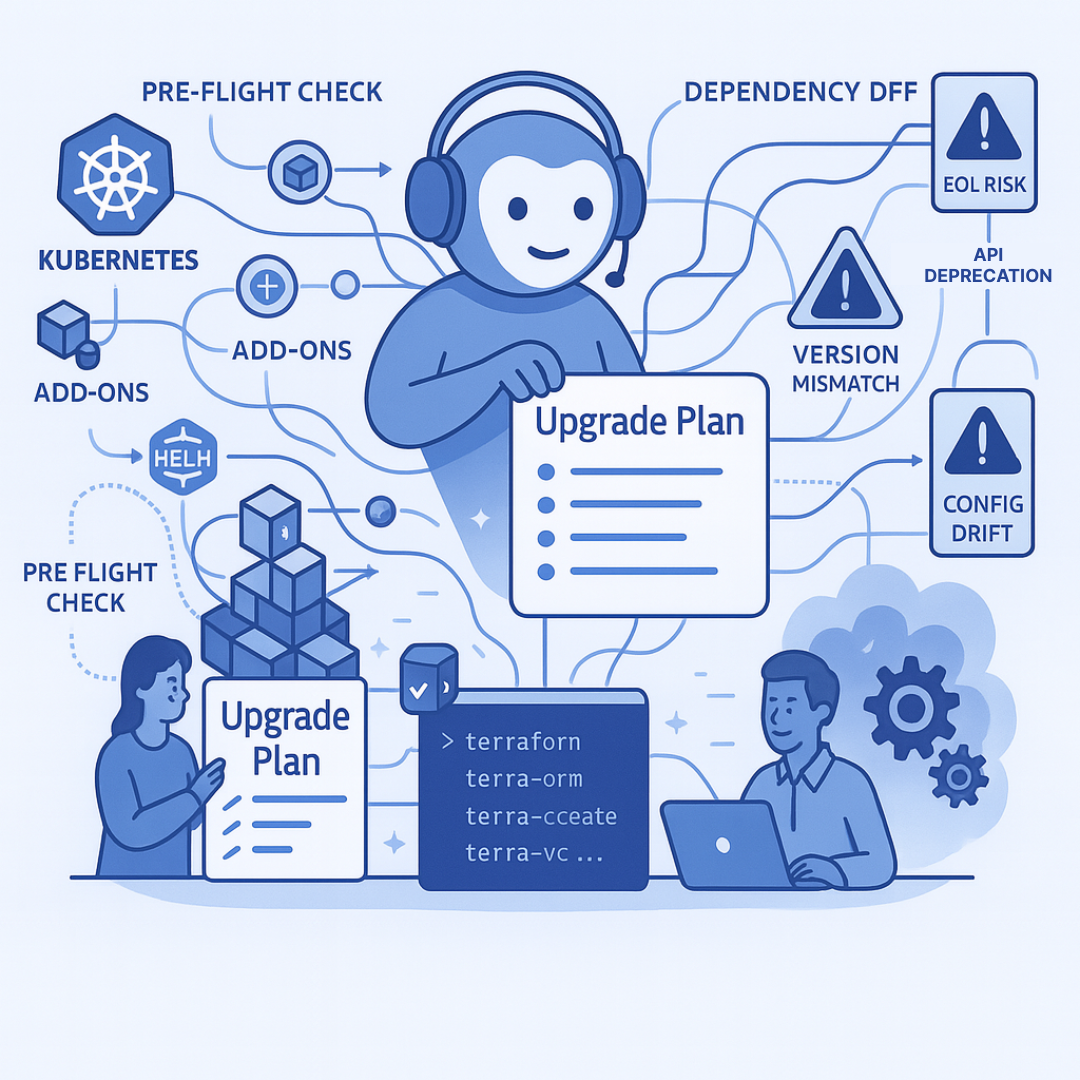

Upgrading operational software isn’t a single task. It’s a chain of tasks: control plane, nodes, add-ons, configurations, Helm charts, deployment tools, CI/CD pipelines, API clients. All with their own versions, timelines, and quirks.
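One way to make that chain explicit is to write the dependencies down and let a topological sort produce a safe order. The sketch below uses Python's standard `graphlib` module. The dependency map is hypothetical: only "control plane before nodes" reflects a widely documented rule, and the rest would need to be replaced with what is true for your stack.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each component lists what must be upgraded first.
UPGRADE_DEPENDENCIES = {
    "control plane": set(),
    "nodes": {"control plane"},
    "add-ons": {"control plane", "nodes"},
    "Helm charts": {"add-ons"},
    "CI/CD pipelines": {"Helm charts"},
    "API clients": {"control plane"},
}


def upgrade_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one valid upgrade order; raises graphlib.CycleError on circular dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for step, component in enumerate(upgrade_order(UPGRADE_DEPENDENCIES), start=1):
        print(f"{step}. {component}")
```

Several orders can satisfy the same constraints, so the output is one valid sequence rather than the only one. The useful part is less the sort itself than being forced to write the dependencies down where everyone can see and challenge them.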

And often, the official docs don’t have everything you need. Release notes are vague. Forums are outdated. You end up testing things in staging, hitting random errors, and losing hours tracking down a root cause that wasn’t documented anywhere.

For example, take Istio’s own tooling. The in-cluster Istio Operator was removed in version 1.24, so teams must migrate every existing Istio Operator custom resource into Helm charts before upgrading. That migration isn’t just about running a command. It involves updating labels and annotations, verifying that all settings still apply under Helm, and testing the new installation path. Suddenly, an upgrade that should deliver new features and security fixes turns into a multi-month research project, taking much longer than it would if everything were laid out clearly upfront.

It’s not that engineers don’t want to upgrade. It’s that they’ve been burned by how unclear and fragmented the process is.

Sometimes there’s exactly one person on the team who knows how to perform a complex upgrade. They’ve done it before, they know the traps, and they’ve got all the tribal knowledge in their head. If that person is busy or on leave, the upgrade waits.

We talk a lot about standardization, but in practice, upgrades often depend on individuals. That’s not sustainable. And it makes every upgrade slower, harder to delegate, and more likely to be deferred.

Even motivated teams hit a wall when it comes to figuring out what should be upgraded, in what order, and how to do it safely.

It’s one thing to be told "you’re using deprecated APIs." It’s another to get a clear plan that says: here’s the exact change to make, here’s why it matters, and here’s what to test after.

Without that, the safest move is to do nothing. Or at least wait until there’s a fire.
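As a rough illustration of what such a plan could look like, the Python sketch below turns a list of Kubernetes manifests (already parsed into dictionaries) into entries with a change, a reason, and a follow-up test. It assumes a Kubernetes context, which the original text only implies. The `PlanItem` structure, the `build_plan` function, and the wording of the test step are hypothetical; the API versions and removal releases in the table follow the Kubernetes deprecated API migration guide, but the table is deliberately incomplete.

```python
from dataclasses import dataclass

# (apiVersion, kind) -> (replacement apiVersion, release that stopped serving the old one)
REMOVED_APIS = {
    ("extensions/v1beta1", "Ingress"): ("networking.k8s.io/v1", "1.22"),
    ("networking.k8s.io/v1beta1", "Ingress"): ("networking.k8s.io/v1", "1.22"),
    ("batch/v1beta1", "CronJob"): ("batch/v1", "1.25"),
    ("policy/v1beta1", "PodDisruptionBudget"): ("policy/v1", "1.25"),
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): ("autoscaling/v2", "1.26"),
}


@dataclass
class PlanItem:
    resource: str    # which object is affected
    change: str      # the exact change to make
    why: str         # why it matters
    test_after: str  # what to verify afterwards


def build_plan(manifests: list[dict]) -> list[PlanItem]:
    """Build one plan entry per manifest that uses a removed API version."""
    plan = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        if key not in REMOVED_APIS:
            continue
        replacement, removed_in = REMOVED_APIS[key]
        name = manifest.get("metadata", {}).get("name", "<unnamed>")
        plan.append(PlanItem(
            resource=f"{key[1]}/{name}",
            change=f"apiVersion: {key[0]} -> {replacement}",
            why=f"{key[0]} is no longer served as of Kubernetes {removed_in}",
            test_after=f"apply in staging and confirm {key[1]}/{name} behaves as before",
        ))
    return plan
```

A real plan would also need to cover schema changes that come with the new version (the `networking.k8s.io/v1` Ingress, for example, changed how backends and path types are expressed), so treat the `change` field here as a starting point rather than the full edit.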

In teams that upgrade regularly, a few patterns show up:

Most importantly, their leaders recognize the pain, invest in tooling, and create space for maintenance work to be seen as valuable. Not invisible toil, but real engineering.

Delays aren’t always caused by apathy or ignorance. They’re the result of tradeoffs, fear, confusion, and the absence of clear paths forward.

If we want timely upgrades, we need to design for the human side of the process too.

Because what delays upgrades isn’t a lack of importance. It’s friction. And friction is something leaders can fix by identifying the pain points, empathizing with engineers, and investing in tooling that simplifies and automates the process.